Machine Learning Infos in AI4(M)S Papers

Update Time: 2025-10-06 12:59:10

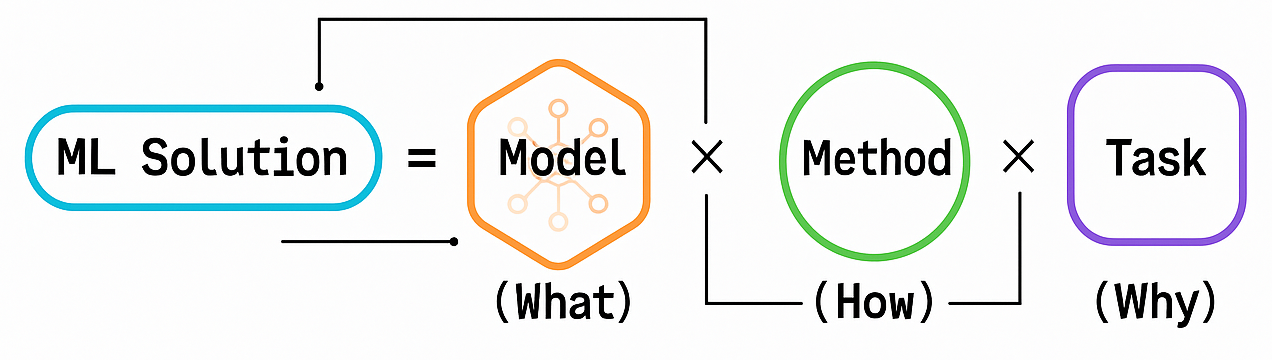

🌳 Machine Learning Taxonomy

🎯 Table 1: Tasks (What to Solve) [16 Categories → 91 Specifics]

| Category | Items |

|---|---|

| Prediction Tasks | Regression, Classification, Binary Classification, Multi-class Classification, Multi-label Classification, Ordinal Regression, Time Series Forecasting, Survival Analysis |

| Ranking and Retrieval | Ranking, Information Retrieval, Recommendation, Collaborative Filtering, Content-Based Filtering |

| Clustering and Grouping | Clustering, Community Detection, Grouping |

| Dimensionality Reduction | Dimensionality Reduction, Feature Selection, Feature Extraction |

| Anomaly and Outlier | Anomaly Detection, Outlier Detection, Novelty Detection, Fraud Detection |

| Density and Distribution | Density Estimation, Distribution Estimation |

| Structured Prediction | Structured Prediction, Sequence Labeling, Named Entity Recognition, Part-of-Speech Tagging, Sequence-to-Sequence |

| Computer Vision Tasks | Image Classification, Object Detection, Object Localization, Semantic Segmentation, Instance Segmentation, Panoptic Segmentation, Pose Estimation, Action Recognition, Video Classification, Optical Flow Estimation, Depth Estimation, Image Super-Resolution, Image Denoising, Image Inpainting, Style Transfer, Image-to-Image Translation, Image Generation, Video Generation |

| Natural Language Processing Tasks | Language Modeling, Text Classification, Sentiment Analysis, Machine Translation, Text Summarization, Question Answering, Reading Comprehension, Dialog Generation, Text Generation, Paraphrase Generation, Text-to-Speech, Speech Recognition, Speech Synthesis |

| Graph Tasks | Node Classification, Link Prediction, Graph Classification, Graph Generation, Graph Matching, Influence Maximization |

| Decision Making | Decision Making, Policy Learning, Control, Planning, Optimization, Resource Allocation |

| Design Tasks | Experimental Design, Hyperparameter Optimization, Architecture Search, AutoML, Neural Architecture Search |

| Association and Pattern | Association Rule Mining, Pattern Recognition, Motif Discovery |

| Matching and Alignment | Entity Matching, Entity Alignment, Record Linkage, Image Matching |

| Generative Tasks | Data Generation, Data Augmentation, Synthetic Data Generation |

| Causal Tasks | Causal Inference, Treatment Effect Estimation, Counterfactual Reasoning |
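
The bracketed counts in the table heading (categories → specifics) can be checked mechanically. The following is a minimal sketch, assuming the table is available as pipe-delimited text with one `| Category | Items |` row per line. The function name `count_taxonomy` and the two-row `sample` are illustrative and not part of the original report.

```python
def count_taxonomy(table_text: str) -> tuple[int, int]:
    """Return (number of categories, total number of items) for a pipe table."""
    categories = 0
    items = 0
    for line in table_text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip blank lines and any non-table text
        cells = [c.strip() for c in line.strip("|").split("|")]
        if len(cells) != 2:
            continue  # not a two-column Category/Items row
        name, item_cell = cells
        # Skip the header row and the |---|---| separator row.
        if name == "Category" or set(name) <= {"-", ":"}:
            continue
        categories += 1
        # Items are comma-separated; ignore empty fragments.
        items += len([i for i in item_cell.split(",") if i.strip()])
    return categories, items


# Hypothetical two-row excerpt in the same layout as the tables above.
sample = """
| Category | Items |
|---|---|
| Clustering and Grouping | Clustering, Community Detection, Grouping |
| Density and Distribution | Density Estimation, Distribution Estimation |
"""
print(count_taxonomy(sample))
```

Splitting on commas is only safe because the taxonomy items themselves contain no commas; the free-text rows in the per-paper tables (for example Performance Highlights) would need a different parser.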

📊 Table 2: Models (What to Use) [18 Categories → 102 Specifics]

| Category | Items |

|---|---|

| Linear Models | Linear Model, Polynomial Model, Generalized Linear Model |

| Tree-based Models | Decision Tree, Random Forest, Gradient Boosting Tree, XGBoost, LightGBM, CatBoost |

| Kernel-based Models | Support Vector Machine, Gaussian Process, Radial Basis Function Network |

| Probabilistic Models | Naive Bayes, Bayesian Network, Hidden Markov Model, Markov Random Field, Conditional Random Field, Gaussian Mixture Model, Latent Dirichlet Allocation |

| Basic Neural Networks | Perceptron, Multi-Layer Perceptron, Feedforward Neural Network, Radial Basis Function Network |

| Convolutional Neural Networks | Convolutional Neural Network, LeNet, AlexNet, VGG, ResNet, Inception, DenseNet, MobileNet, EfficientNet, SqueezeNet, ResNeXt, SENet, NASNet, U-Net |

| Recurrent Neural Networks | Recurrent Neural Network, Long Short-Term Memory, Gated Recurrent Unit, Bidirectional RNN, Bidirectional LSTM |

| Transformer Architectures | Transformer, BERT, GPT, T5, Vision Transformer, CLIP, DALL-E, Swin Transformer |

| Attention Mechanisms | Attention Mechanism, Self-Attention Network, Multi-Head Attention, Cross-Attention |

| Graph Neural Networks | Graph Neural Network, Graph Convolutional Network, Graph Attention Network, GraphSAGE, Message Passing Neural Network, Graph Isomorphism Network, Temporal Graph Network |

| Generative Models | Autoencoder, Variational Autoencoder, Generative Adversarial Network, Conditional GAN, Deep Convolutional GAN, StyleGAN, CycleGAN, Diffusion Model, Denoising Diffusion Probabilistic Model, Normalizing Flow |

| Energy-based Models | Boltzmann Machine, Restricted Boltzmann Machine, Hopfield Network |

| Memory Networks | Neural Turing Machine, Memory Network, Differentiable Neural Computer |

| Specialized Architectures | Capsule Network, Siamese Network, Triplet Network, Attention Network, Pointer Network, WaveNet, Seq2Seq, Encoder-Decoder |

| Object Detection Models | YOLO, R-CNN, Fast R-CNN, Faster R-CNN, Mask R-CNN, FPN, RetinaNet |

| Time Series Models | ARIMA Model, SARIMA Model, State Space Model, Temporal Convolutional Network, Prophet |

| Point Cloud Networks | PointNet, PointNet++ |

| Matrix Factorization | Matrix Factorization, Non-negative Matrix Factorization, Singular Value Decomposition |

🎓 Table 3: Learning Methods (How to Learn) [11 Categories → 82 Specifics]

| Category | Items |

|---|---|

| Basic Learning Paradigms | Supervised Learning, Unsupervised Learning, Semi-Supervised Learning, Self-Supervised Learning, Reinforcement Learning |

| Advanced Learning Paradigms | Transfer Learning, Multi-Task Learning, Meta-Learning, Few-Shot Learning, Zero-Shot Learning, One-Shot Learning, Active Learning, Online Learning, Incremental Learning, Continual Learning, Lifelong Learning, Curriculum Learning |

| Training Strategies | Batch Learning, Mini-Batch Learning, Stochastic Learning, End-to-End Learning, Adversarial Training, Contrastive Learning, Knowledge Distillation, Fine-Tuning, Pre-training, Prompt Learning, In-Context Learning |

| Optimization Methods | Gradient Descent, Stochastic Gradient Descent, Backpropagation, Maximum Likelihood Estimation, Maximum A Posteriori, Expectation-Maximization, Variational Inference, Evolutionary Learning |

| Reinforcement Learning Methods | Q-Learning, Policy Gradient, Value Iteration, Policy Iteration, Temporal Difference Learning, Monte Carlo Learning, Actor-Critic, Model-Free Learning, Model-Based Learning, Inverse Reinforcement Learning, Imitation Learning, Multi-Agent Learning |

| Special Learning Settings | Weakly Supervised Learning, Noisy Label Learning, Positive-Unlabeled Learning, Cost-Sensitive Learning, Imbalanced Learning, Multi-Instance Learning, Multi-View Learning, Co-Training, Self-Training, Pseudo-Labeling |

| Domain and Distribution | Domain Adaptation, Domain Generalization, Covariate Shift Adaptation, Out-of-Distribution Learning |

| Collaborative Learning | Federated Learning, Distributed Learning, Collaborative Learning, Privacy-Preserving Learning |

| Ensemble Methods | Ensemble Learning, Bagging, Boosting, Stacking, Blending |

| Representation Learning | Representation Learning, Feature Learning, Metric Learning, Distance Learning, Embedding Learning, Dictionary Learning, Manifold Learning |

| Learning Modes | Generative Learning, Discriminative Learning, Transductive Learning, Inductive Learning |

📈 Summary of Statistics

📑 ML Infos in 361/405 Papers (Chronological Order)

405. A generative artificial intelligence approach for the discovery of antimicrobial peptides against multidrug-resistant bacteria, Nature Microbiology (October 03, 2025)

| Category | Items |

|---|---|

| Datasets | UniProtKB/Swiss-Prot proteome (non-redundant canonical and isoform sequences), AMP dataset (compiled from public AMP databases), Non-AMP dataset (cytoplasm-filtered sequences), External validation dataset (AMPs and non-AMPs), Toxin and non-toxin dataset, Generated sequences from external unconstrained generation models, Generated non-redundant short-peptide datasets (GNRSPDs) from AMPGenix, Non-redundant short-peptide datasets (NRSPDs) constructed from UniProtKB/Swiss-Prot |

| Models | Transformer, GPT, BERT, Random Forest, Support Vector Machine, Multi-Layer Perceptron, Variational Autoencoder, Generative Adversarial Network |

| Tasks | Language Modeling, Text Generation, Binary Classification, Synthetic Data Generation, Data Generation |

| Learning Methods | Self-Supervised Learning, Transfer Learning, Fine-Tuning, Pre-training, Representation Learning |

| Performance Highlights | model_size_parameters: more than 124 million parameters, pretraining_corpus_size: 609,216 protein sequences (Swiss-Prot), generated_sequences_count: 7,798 (AMPGenix default T=1), AUC_benchmarking_set: 0.97, AUPRC_benchmarking_set: 0.96, AUC_test_set: 0.99, AUPRC_test_set: 0.99, Precision: 90.67%, F1_score: 88.89%, MCC: 81.66%, Specificity: 93.93%, Sensitivity: 87.17%, External_validation_precision: 93.99% on independent external validation dataset, AUC_test_set: 0.93, AUPRC_test_set: 0.92, Precision: 84.79%, F1_score: 85.90%, MCC: 72.08%, Specificity: 85.06%, Sensitivity: 87.04%, positive_prediction_rate_on_classifiers: AMPGenix-generated sequences consistently outperformed ProteoGPT across temperature settings when evaluated by 6 AMP classifiers (higher AMP recognition rate), AMPGenix-T1_uniqueness: 0.97, AMPGenix-T1_diversity: 0.98, AMPGenix-T1_novelty: 0.99, AMPGenix-T1_FCD: 10.21, AMPGenix-T2_FCD: 9.26, AMPGenix-T3_FCD: 9.57, ProteoGPT_T1_FCD: 14.87, Macrel_AUC: 0.91 (benchmarking set), Macrel_precision: 95.95% (from Extended Data Table 1) but low sensitivity (52.28%), AmPEP_AUC: not listed; Extended Data Table 1: Precision 32.60%, F1 39.23%, MCC -19.78%, iAMP_Pred_AUC: 0.86 (benchmarking set), iAMP_Pred_precision: 77.86% (Extended Data Table 1), FCD: 13.45 (Extended Data Table 2), uniqueness/diversity/novelty: reported for PepCVAE in Extended Data Table 2 (diversity and novelty ~0.99), FCD: 11.54 (Extended Data Table 2), diversity/novelty: reported in Extended Data Table 2 |

| Application Domains | Antimicrobial peptide (AMP) discovery, Microbiology / infectious disease (multidrug-resistant bacteria: CRAB, MRSA), Protein sequence modeling, Drug discovery / therapeutic peptide design, Computational biology / bioinformatics (sequence mining and generation) |
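
The Performance Highlights row above reports precision, F1 score, MCC, specificity, and sensitivity for binary AMP/non-AMP classification. As a reminder of how these quantities relate, here is a minimal sketch that derives them from a confusion matrix using the standard definitions. The function name and the counts in the usage line are hypothetical and are not taken from the paper.

```python
import math


def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Standard binary-classification metrics from confusion-matrix counts.

    Assumes every denominator is non-zero (no guard against empty classes).
    """
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)  # also called recall
    specificity = tn / (tn + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    # Matthews correlation coefficient, in the range [-1, 1].
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )
    return {
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": f1,
        "mcc": mcc,
    }


# Hypothetical counts, for illustration only.
m = classification_metrics(tp=90, fp=10, tn=85, fn=15)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```

The report lists MCC as a percentage; under the definition above that corresponds to the coefficient multiplied by 100, which is how a negative value such as the one listed for AmPEP can arise.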

404. A comprehensive genetic catalog of human double-strand break repair, Science (October 02, 2025)

| Category | Items |

|---|---|

| Datasets | REPAIRome (this study), Toronto KnockOut CRISPR Library v3 (TKOv3), CloneTrackerXP barcode library experiment (representation/depth determination), AAVS1 endogenous locus cut sites (validation), PCAWG tumor cohort (used for mutational signature association), Processed REPAIRome data and code |

| Models | None |

| Tasks | Clustering, Dimensionality Reduction, Feature Selection, Feature Extraction, Information Retrieval, Pattern Recognition |

| Learning Methods | Unsupervised Learning, Representation Learning, Feature Learning |

| Performance Highlights | selected_genes_count: 168, STRING_PPI_enrichment: < 1e-16, GO_enrichment_DSB_repair_FDR: 2.1e-13 (GO:0006302), GO_enrichment_DNA_repair_FDR: 1.3e-11 (GO:0006281), GO_enrichment_NHEJ_FDR: 2.2e-11 (GO:0006303), selection_criteria: distance > 5; FDR < 0.01; replicate PCC > 0.3, UMAP_visualizations: Displayed distance/insertion-deletion/microhomology/editing-efficiency gradients across genes; highlighted NHEJ cluster around LIG4/XRCC4/POLL, examples_distance_values: HLTF distance = 19.7; some genes >10, cosine_similarity_match: VHL knockout effect vector best matched COSMIC indel signature ID11, ID11_prevalence_in_ccRCC: > 50% prevalence (in ccRCC), statistical_tests: prevalence p < 0.001 (Fisher’s exact test); signature activity p < 0.001 (Wilcoxon test); VHL expression (FPKM) associated with active ID11 p < 0.001, correlation_threshold_for_edges: PCC > 0.45, network_genes_count: 183, STRING_PPI_enrichment_of_POLQ_subnetwork: < 1.0e-16, enriched_complexes_in_POLQ_subnetwork: BTRR (FDR = 6.83e-5), Fanconi anemia pathway (FDR = 7.45e-9), SAGA complex (FDR = 3.20e-8) |

| Application Domains | Molecular Biology, Genomics, DNA double-strand break (DSB) repair, CRISPR-Cas gene editing, Cancer Genomics, Computational Biology / Bioinformatics |

402. Machine learning of charges and long-range interactions from energies and forces, Nature Communications (October 01, 2025)

| Category | Items |

|---|---|

| Datasets | Random point-charge gas (this work), KF aqueous solutions (this work), LODE molecular dimer dataset (charged molecular dimers) (ref. 42 / BFDB), SPICE polar dipeptides subset (ref. 43), 4G-HDNNP benchmark datasets (from Ko et al., ref. 12), Pt(111)/KF(aq) dataset (ref. 49), TiO2(101)/NaCl + NaOH + HCl (aq) dataset (ref. 50), LiCl(001)/GaF3(001) interface (this work / generated via on-the-fly FLARE active sampling), Liquid water dataset (ref. 71) used for MD speed benchmarking |

| Models | Multi-Layer Perceptron, Message Passing Neural Network, Graph Neural Network, Ensemble Learning |

| Tasks | Regression, Feature Extraction, Representation Learning, Anomaly Detection |

| Learning Methods | Supervised Learning, Active Learning, Ensemble Learning, Representation Learning, Backpropagation |

| Performance Highlights | C10H2/C10H3+_energy_RMSE_meV_per_atom: 0.73, C10H2/C10H3+_force_RMSE_meV_per_A: 36.9, Na8/9Cl8_energy_RMSE_meV_per_atom: 0.21, Na8/9Cl8_force_RMSE_meV_per_A: 9.78, Au2-MgO(001)_energy_RMSE_meV_per_atom: 0.073, Au2-MgO(001)_force_RMSE_meV_per_A: 7.91, Pt(111)/KF(aq)_energy_RMSE_meV_per_atom: 0.309, Pt(111)/KF(aq)_force_RMSE_meV_per_A: 34.1, TiO2(101)/NaCl+NaOH+HCl(aq)_energy_RMSE_meV_per_atom: 0.435, TiO2(101)/NaCl+NaOH+HCl(aq)_force_RMSE_meV_per_A: 70.5, LiCl/GaF3_ID_force_RMSE_meV_per_A: 78.8, LiCl/GaF3_ID_force_RMSE_meV_per_A_CACE-LR: 67.8, LiCl/GaF3_OOD_force_RMSE_meV_per_A_SR: 116.3, LiCl/GaF3_OOD_force_RMSE_meV_per_A_LR: 40.5, Random_point_charges_charge_prediction_MAE_with_10_configs_e: nearly zero (nearly exact), KF_aq_energy_MAE_meV_per_atom>=100samples: < 0.3, KF_aq_charge_learning_converged_after~couple_hundred_samples: qualitative, Dipole_R2_vs_DFT_on_polar_dipeptides: 0.991, Dipole_MAE_e-angstrom_LES: 0.089, MBIS_dipole_MAE_e-angstrom: 0.063, Quadrupole_R2_vs_DFT: 0.911, Charges_R2_vs_MBIS: 0.87, Charges_MAE_vs_MBIS_e: 0.24, BEC_diagonal_R2: 0.976, BEC_offdiagonal_R2: 0.838, MD_SR_max_atoms_single_NVIDIA_L40S_GPU: 40000, MD_LR_max_atoms_single_NVIDIA_L40S_GPU: 13000, LR_overhead_vs_SR: minimal with updated implementation (comparable performance) |

| Application Domains | Atomistic simulations of materials, Computational chemistry / molecular modeling, Electrolyte / electrode interfaces (electrochemistry), Ionic solutions and electric double layers, Charged molecular complexes (binding curves), Solid–solid interfaces and heterostructures, Molecular property prediction (dipole, quadrupole, Born effective charges), Machine-learned interatomic potentials (MLIPs) development |
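
The energy and force entries above are reported as RMSE, the dipole and charge entries as MAE and R². For readers comparing these numbers across papers, the following is a minimal sketch of the three measures using their standard definitions. The function names and the sample values are illustrative assumptions and do not come from the paper.

```python
import math


def rmse(y_true: list[float], y_pred: list[float]) -> float:
    """Root-mean-square error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true: list[float], y_pred: list[float]) -> float:
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r2(y_true: list[float], y_pred: list[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical reference and predicted values, for illustration only.
reference = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]
print(f"RMSE = {rmse(reference, predicted):.4f}")
print(f"MAE  = {mae(reference, predicted):.4f}")
print(f"R2   = {r2(reference, predicted):.4f}")
```

RMSE penalizes large individual errors more heavily than MAE, so the two are not directly interchangeable when comparing entries that report different measures.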

401. Heat-rechargeable computation in DNA logic circuits and neural networks, Nature (October 01, 2025)

| Category | Items |

|---|---|

| Datasets | MNIST (Modified National Institute of Standards and Technology) database, Custom 9-bit two-memory input patterns (L and T patterns), Custom 100-bit two-memory input patterns, Custom Fibonacci-word input patterns (first 16 elements), Synthetic test patterns used for individual circuit component evaluation (e.g., two-input WTA combinations, thresholds) |

| Models | Feedforward Neural Network, Multi-Layer Perceptron |

| Tasks | Binary Classification, Image Classification, Classification, Boolean Logic (outside the task taxonomy; implemented as Boolean operations), Sequence-to-Sequence |

| Learning Methods | Unsupervised Learning, Supervised Learning |

| Performance Highlights | reset_success_rate_annihilators_simulated: 93%, reset_success_rate_summation_gates_simulated: 85%, number_of_distinct_strands_in_system: up to 289 distinct strands; 213 present for tested patterns, reusability_rounds_demonstrated: 10 rounds of sequential tests (experiments) with consistent performance; simulations and experiments closely matched, time_to_reset: heating to 95°C and cooling to 20°C in 1 min (reset protocol), rounds_of_computation: 16 rounds (all possible 4-bit inputs), resets_demonstrated: 15 resets over 640 hours, consistency: maintained consistent performance across 16 rounds, kinetics_difference_before_fix: >10-fold difference in kinetics between two hairpin gates sharing same toehold but differing in long domains, reset_success_rates_for_pair_designs: simulations applied 90% and 86% reset success rates for two gates to explain experiments (Extended Data Fig. 6/7), correct_computation_combinations_tested: six input combinations tested with correct behaviour and preserved after reset, reaction_completion_with_hairpin_downstream: approx. 60% reaction completion at high input concentration, reaction_completion_with_two-stranded_downstream: restored full reaction completion, signal_amplification: 10-fold signal amplification within 2 h for chosen catalyst design, rounds_demonstrated: 10 rounds, reusability: consistent off state over 10 rounds when unique inhibitors used, sensitivity_to_inhibitor_quality: performance decay with universal inhibitor due to 5% effective concentration deviation and increasing leak |

| Application Domains | DNA nanotechnology / molecular programming, Synthetic biology, Molecular computing, Molecular diagnostics (potential application), Programmable molecular machines / autonomous chemical systems, Origin-of-life / prebiotic chemistry (conceptual inspiration for heat stations) |

400. Predictive model for the discovery of sinter-resistant supports for metallic nanoparticle catalysts by interpretable machine learning, Nature Catalysis (September 29, 2025)

| Category | Items |

|---|---|

| Datasets | NN-MD generated Pt NP / metal–oxide support dataset (203 systems), OC22-derived candidate surface set (10,662 DFT-relaxed unary and binary metal–oxide surfaces), iGAM training/test split (177 train / 26 test), Experimental adhesion reference: Pt/MgO(100), Experimental sintering test dataset (Pt NPs on α-Al2O3, CeO2, BaO) |

| Models | Generalized Linear Model, Feedforward Neural Network, Ensemble Learning |

| Tasks | Regression, Data Generation, Feature Selection, Ranking, Feature Extraction |

| Learning Methods | Supervised Learning, Active Learning, Pre-training, Ensemble Learning, Transfer Learning |

| Performance Highlights | MAE(Eadh)_train: 0.15 J m^-2, R2_train: 0.90, MAE(Eadh)_test: 0.19 J m^-2, R2_test: 0.79, MAE(Eadh)_6feature: 0.29 J m^-2, MAE(theta)_6feature: ≈10°, simulated_systems: 203, MD_length_each: 500 ps (5×10^5 time steps of 1 fs), temperature: 800 °C, top4_feature_importance_fraction: >80% |

| Application Domains | heterogeneous catalysis, supported metal nanoparticle catalysts (Pt on metal-oxide supports), materials discovery / high-throughput catalyst screening, computational materials science (DFT + ML + MD), nanocatalyst stability / sintering resistance |

399. InterPLM: discovering interpretable features in protein language models via sparse autoencoders, Nature Methods (September 29, 2025)

| Category | Items |

|---|---|

| Datasets | UniRef50 (5 million random protein sequences), Swiss-Prot (UniprotKB reviewed subset; sampled 50,000 proteins), AlphaFold Database (AFDB-F1-v4), InterPro annotations (used for validation of missing annotations), ESM-2 embeddings (pretrained model outputs) |

| Models | Transformer, Autoencoder, Hidden Markov Model |

| Tasks | Feature Extraction, Clustering (including feature activation patterns: structural vs. sequential), Binary Classification, Dimensionality Reduction, Language Modeling, Text Generation, Regression, Feature Selection |

| Learning Methods | Self-Supervised Learning, Unsupervised Learning, Pre-training, Transfer Learning, Representation Learning, Supervised Learning |

| Performance Highlights | max_features_with_strong_concept_alignment_in_layer: 2,309 (ESM-2-8M layer 5), features_identified_by_SAE_vs_neurons: SAEs extract 3× the concepts found in 8M ESM neurons and 7× in 650M ESM neurons (summary), expansion_factors: 32× (320→10,240 features for ESM-2-8M), 8× (1,280→10,240 for ESM-2-650M), SAE_feature_max_F1_range_on_ESM-2-650M: 0.95–1.0 (maximum F1 scores observed for SAE features), neuron_max_F1_range: 0.6–0.7 (neurons), concepts_detected_ESM-2-650M_vs_ESM-2-8M: 427 vs 143 concepts (≈1.7× more concepts in 650M subset), example_feature_specificity_f1503_F1: 0.998, other_TBDR_cluster_feature_F1s: 0.793, 0.611, glycine_feature_F1s: 0.995, 0.990, 0.86 (highly glycine-specific features), steering_effects: Steering periodic glycine features increased predicted probability of glycine at both steered and masked positions; effect propagated to multiple subsequent periodic repeats with diminishing intensity (quantitative probability changes shown in Fig. 6; steer amounts up to 2.5× maximum activation), median_Pearson_r_for_LLM_generated_descriptions: 0.72 (median across 1,240 features), example_feature_correlations: 0.83, 0.73, 0.99 (example features shown in Fig. 4), example_confirmation: Independent confirmation of Nudix motif in B2GFH1 via HMM-based InterPro annotation (qualitative validation) |

| Application Domains | protein modeling, protein engineering, computational biology, bioinformatics (protein annotation), model interpretability / mechanistic interpretability, biological discovery (novel motif/domain identification) |

398. SimpleFold: Folding Proteins is Simpler than You Think, Preprint (September 27, 2025)

| Category | Items |

|---|---|

| Datasets | Protein Data Bank (PDB), AFDB SwissProt subset (from AlphaFold Protein Structure Database), AFESM (representative clusters), AFESM-E (extended AFESM), CAMEO22 benchmark, CASP14 benchmark (subset), ATLAS (MD ensemble dataset) |

| Models | Transformer, Attention Mechanism, Multi-Head Attention, Pretrained Transformer (ESM2-3B embeddings) |

| Tasks | Regression, Sequence-to-Sequence, Synthetic Data Generation, Distribution Estimation, Multi-class Classification |

| Learning Methods | Generative Learning, Pre-training, Fine-Tuning, Transfer Learning, Supervised Learning, Representation Learning |

| Performance Highlights | CAMEO22_TM-score_mean/median: 0.837 / 0.916, CAMEO22_GDT-TS_mean/median: 0.802 / 0.867, CAMEO22_LDDT_mean/median: 0.773 / 0.802, CAMEO22_LDDT-Cα_mean/median: 0.852 / 0.884, CAMEO22_RMSD_mean/median: 4.225 / 2.175, CASP14_TM-score_mean/median: 0.720 / 0.792, CASP14_GDT-TS_mean/median: 0.639 / 0.703, CASP14_LDDT_mean/median: 0.666 / 0.709, CASP14_LDDT-Cα_mean/median: 0.747 / 0.829, CASP14_RMSD_mean/median: 7.732 / 3.923, SimpleFold-100M_CAMEO22_TM-score_mean/median: 0.803 / 0.878, SimpleFold-360M_CAMEO22_TM-score_mean/median: 0.826 / 0.905, SimpleFold-700M_CAMEO22_TM-score_mean/median: 0.829 / 0.915, SimpleFold-1.1B_CAMEO22_TM-score_mean/median: 0.833 / 0.924, SimpleFold-1.6B_CAMEO22_TM-score_mean/median: 0.835 / 0.916, SimpleFold-100M_CASP14_TM-score_mean/median: 0.611 / 0.628, SimpleFold-360M_CASP14_TM-score_mean/median: 0.674 / 0.758, SimpleFold-700M_CASP14_TM-score_mean/median: 0.680 / 0.767, SimpleFold-1.1B_CASP14_TM-score_mean/median: 0.697 / 0.796, SimpleFold-1.6B_CASP14_TM-score_mean/median: 0.712 / 0.801, Pairwise_RMSD_r_no_tuning: 0.44, Global_RMSF_r_no_tuning: 0.45, Per_target_RMSF_r_no_tuning: 0.60, RMWD_no_tuning: 4.22, MD_PCA_W2_no_tuning: 1.62, Joint_PCA_W2_no_tuning: 2.59, %PC_sim>0.5_no_tuning: 28, Weak_contacts_J_no_tuning: 0.36, Transient_contacts_J_no_tuning: 0.27, Exposed_residue_J_no_tuning: 0.39, Exposed_MI_matrix_rho_no_tuning: 0.24, Pairwise_RMSD_r_tuned_SF-MD-3B: 0.45, Global_RMSF_r_tuned_SF-MD-3B: 0.48, Per_target_RMSF_r_tuned_SF-MD-3B: 0.67, RMWD_tuned_SF-MD-3B: 4.17, MD_PCA_W2_tuned_SF-MD-3B: 1.34, Joint_PCA_W2_tuned_SF-MD-3B: 2.18, %PC_sim>0.5_tuned_SF-MD-3B: 38, Weak_contacts_J_tuned_SF-MD-3B: 0.56, Transient_contacts_J_tuned_SF-MD-3B: 0.34, Exposed_residue_J_tuned_SF-MD-3B: 0.60, Exposed_MI_matrix_rho_tuned_SF-MD-3B: 0.32, SimpleFold-3B_Apo/holo_res_flex_global: 0.639, SimpleFold-3B_Apo/holo_res_flex_per-target_mean/median: 0.550 / 0.552, SimpleFold-3B_Apo/holo_TM-ens_mean/median: 0.893 / 0.916, SimpleFold-3B_Fold-switch_res_flex_global: 0.292, SimpleFold-3B_Fold-switch_res_flex_per-target_mean/median: 0.288 / 0.263, SimpleFold-3B_Fold-switch_TM-ens_mean/median: 0.734 / 0.766, pLDDT_vs_LDDT-Cα_Pearson_correlation: 0.77, SimpleFold-3B_inference_time_200steps_seq256_s: 15.6, SimpleFold-3B_inference_time_200steps_seq512_s: 27.8, SimpleFold-3B_inference_time_500steps_seq256_s: 37.2, SimpleFold-100M_inference_time_200steps_seq256_s: 3.8 |

| Application Domains | Protein structure prediction / computational structural biology, Molecular dynamics ensemble generation / protein flexibility modeling, De novo protein design / protein generation, Drug discovery (ensemble observables and cryptic pocket identification), Generative modeling for scientific domains (analogy to text-to-image / text-to-3D) |

397. Design of a potent interleukin-21 mimic for cancer immunotherapy, Science Immunology (September 26, 2025)

| Category | Items |

|---|---|

| Datasets | PDB structures (hIL-21/hIL-21R: PDB 3TGX; native hIL-21 complex PDB 8ENT; hγc complex PDB 7S2R; 21h10 complex PDB 9E2T), Computational design candidate set (Rosetta-generated designs), MC38 syngeneic murine tumor model (MC38 adenocarcinoma), B16F10 murine melanoma model (with adoptive TRP1high/low T cell transfer), LCMV-infected mice (virus-specific CD8 T cell analysis), PDOTS (patient-derived organotypic tumor spheroids) from advanced melanoma patients, Bulk RNA-seq (murine CD8 T cells treated in vitro), Single-cell RNA-seq (scRNA-seq) of tumor-infiltrating CD45+ cells, Crystallography diffraction data (21h10/hIL-21R/hγc complex) |

| Models | Message Passing Neural Network, Graph Neural Network, Other (non-ML computational tools) |

| Tasks | Synthetic Data Generation, Clustering, Dimensionality Reduction, Feature Extraction, Data Generation, Image Classification |

| Learning Methods | Supervised Learning, Generative Learning, Unsupervised Learning |

| Performance Highlights | functional_outcome: Generated variant 21AT36 binds IL-21R but not γc; antagonist did not induce STAT phosphorylation, context_readouts: 21AT36 did not induce STAT phosphorylation in murine CD8 T cells; in MC38 tumors an equimolar dose of 21AT36 did not show antitumor activity (Fig. 2F and 2G), design_pool_size: 185 Rosetta designs generated and filtered; downstream selection and mutagenesis led to 21h10, structural_validation: Crystal structure of 21h10 complex solved (PDB ID: 9E2T) with resolution between 2.3 and 3.4 Å, single_cell_input: ≈4000 cells per tumor; 10 mice (PBS, 21h10) or 5 mice (Neo-2/15, mIL-21) per group pooled; 50,000 read pairs per cell sequencing depth, biological_findings: Identification of multiple immune and nonimmune clusters; 21h10 expanded highly activated CD8 T cells and TRP1low tumor-specific T cells and decreased Treg frequency, design_to-function: 21h10 showed STAT1/STAT3 phosphorylation potency equivalent to native hIL-21 and mIL-21 in both human and murine cells; 21h10 elicits similar gene-expression profile at 100 pM compared with 1 nM mIL-21, thermal_stability: 21h10 melting temperature (Tm) ≈ 75°C |

| Application Domains | cancer immunotherapy, computational protein design / de novo protein design, structural biology (X-ray crystallography), immunology (T cell biology, cytokine signaling), single-cell transcriptomics / tumor microenvironment profiling, ex vivo functional profiling of human tumors (PDOTS) |

396. EpiAgent: foundation model for single-cell epigenomics, Nature Methods (September 25, 2025)

| Category | Items |

|---|---|

| Datasets | Human-scATAC-Corpus, Buenrostro2018, Kanemaru2023, Li2023b, Ameen2022, Li2023a, Lee2023, Zhang2021, Pierce2021, Liscovitch-Brauer2021, Long et al. (ccRCC single-cell multi-omics), 10x Genomics single-cell multi-omics human brain dataset |

| Models | Transformer, Attention Mechanism, Multi-Head Attention, Graph Neural Network, Multi-Layer Perceptron, Feedforward Neural Network, Convolutional Neural Network, Support Vector Machine |

| Tasks | Feature Extraction, Dimensionality Reduction, Multi-class Classification, Data Generation, Treatment Effect Estimation, Counterfactual Reasoning, Domain Adaptation, Zero-Shot Learning, Clustering |

| Learning Methods | Pre-training, Fine-Tuning, Self-Supervised Learning, Supervised Learning, Transfer Learning, Zero-Shot Learning, Domain Adaptation, Representation Learning |

| Performance Highlights | NMI: higher than all six baseline methods after fine-tuning (exact numeric values reported in Supplementary materials), ARI: higher than all six baseline methods after fine-tuning (exact numeric values reported in Supplementary materials), accuracy_improvement_vs_second_best: 11.036% (average), macro_F1_improvement_vs_second_best: 21.549% (average), NMI_improvement_over_raw: 11.123% (average), ARI_improvement_over_raw: 18.605% (average), Pearson_correlation_median: >0.8 (between imputed signals and average raw signals of corresponding cell types), R2_top_1000_DA_cCREs: >0.7, direction_accuracy_top_100_DA_cCREs: >90% (average across cell types), Wasserstein_distance: lower than baselines (better alignment of predicted and real perturbed cell distributions), Pearson_correlation_vs_GEARS_on_Pierce2021: EpiAgent Pearson correlation average 24.177% higher than GEARS, direction_accuracy_top_DA_cCREs: EpiAgent significantly higher than GEARS (GEARS near random), NMI/ARI/kBET/iLISI: EpiAgent achieves best overall performance vs baselines on clustering and batch-correction metrics (exact numeric values in Fig.4e and Supplementary Figs.), embedding_quality: Zero-shot EpiAgent competitive with baselines on datasets with similar cell populations to pretraining corpus (notably Li2023b), clustering_and_metrics: visual and metric superiority reported (UMAP separation; NMI/ARI comparisons in Fig.2b,c), EpiAgent-B_accuracy: >0.88, EpiAgent-NT_accuracy: >0.95, balanced_accuracy_and_macro_F1: >0.8 (for per-cell-type performance), Wasserstein_distance_change_significance: one-sided t-tests yield P values < 0.05 for majority of perturbations (Liscovitch-Brauer2021 dataset), directional_shift_in_synthetic_ccRCC_experiment: knockouts (EGLN3, ABCC1, VEGFA, Group) shift synthetic cells away from cancer-proportion profiles (quantified by average change in proportion of cancer cell-derived cCREs; exact numeric values in Fig.5g) |

| Application Domains | single-cell epigenomics (scATAC-seq), cell type annotation and atlas construction (human brain, normal tissues), hematopoiesis and developmental trajectory analysis, cardiac niches and heart tissues, stem cell differentiation, cancer epigenetics (clear cell renal cell carcinoma), perturbation response prediction (drug stimulation, CRISPR knockouts), batch correction and multi-dataset integration |

395. Activation entropy of dislocation glide in body-centered cubic metals from atomistic simulations, Nature Communications (September 24, 2025)

| Category | Items |

|---|---|

| Datasets | Fe and W MLIP training datasets (extended from refs. 23, 26), PAFI sampling datasets (finite-temperature sampled configurations along reaction coordinates), Empirical potential (EAM) reference calculations, Experimental yield stress datasets (from literature) |

| Models | Machine-Learning Interatomic Potentials (MLIP), Embedded Atom Method (EAM) potentials |

| Tasks | Regression, Data Generation, Image Classification |

| Learning Methods | Supervised Learning, Transfer Learning |

| Performance Highlights | activation_entropy_harmonic_regime_Fe: ΔS(z2) = 6.3 kB, activation_entropy_difference_above_T0_Fe: ΔS(z2)-ΔS(z1) = 1.6 kB, activation_entropy_harmonic_regime_W: approx. 8 kB, MD_velocity_prefactor_fit_HTST: ν = 3.8×10^9 Hz (HTST fit), MD_velocity_prefactor_fit_VHTST: ν = 9.2×10^10 Hz (variational HTST fit), simulation_cell_size: 96,000 atoms (per atomistic simulation cell), PAFI_computational_cost_per_condition: 5×10^4 to 2.5×10^5 CPU hours (for anharmonic Gibbs energy calculations), Hessian_diagonalization_cost: ≈5×10^4 CPU-hours per atomic system using MLIP, effective_entropy_variation_range_Fe_EAM: ΔSeff varies by ~10 kB between 0 and 700 MPa (Fe, EAM), departure_from_harmonicity_temperature: marked departure from harmonic prediction above ~20 K (Fe, EAM), inverse_Meyer_Neldel_TMNs: Fe: TMN = -406 K (effective fit), W: TMN = -1078 K (effective fit) |

| Application Domains | materials science, computational materials / atomistic simulation, solid mechanics / metallurgy (dislocation glide & yield stress in BCC metals), physics of defects (dislocations, kink-pair nucleation) |

394. Design of facilitated dissociation enables timing of cytokine signalling, Nature (September 24, 2025)

| Category | Items |

|---|---|

| Datasets | PDB structures (design models and solved crystal structures; accession codes 9DCX, 9DCY, 9DCZ, 9DD0, 9DD1, 9DD2, 9DD3, 9DD4, 9DD5, 9OLQ), Designs and analysis code, sequences and source data (Zenodo deposit), Single-molecule tracking (SMT) raw data (Zenodo DOIs: multiple entries), RNA-seq raw data (BioProject PRJNA1302552), SKEMPI database (referenced), Reference genome and gene sets (GRCh38, MSigDB Hallmark gene sets) |

| Models | Diffusion Model |

| Tasks | Clustering, Dimensionality Reduction, Synthetic Data Generation |

| Learning Methods | Unsupervised Learning |

| Performance Highlights | AF2_predicted_RMSD_to_crystal_Cα: <= 1.0 Å, designs_tested_initial_pipeline: 24 designs tested; multiple working designs obtained on first attempt, MD_simulation_length_per_trajectory: 1 μs (triplicate trajectories), agreement_with_DEER_distance_distributions: MD-simulated distance distributions span experimental DEER distribution (qualitative agreement) |

| Application Domains | De novo protein design / computational protein engineering, Structural biology (X-ray crystallography, DEER spectroscopy), Biophysics (kinetic design and measurement of protein–protein interactions), Synthetic biology / biosensor design (rapid luciferase sensors), Immunology / cytokine signalling (design and temporal control of IL-2 mimics), Single-molecule microscopy (live-cell receptor dimerization dynamics), Molecular simulation (MD, integrative modelling) |

393. Tailoring polymer electrolyte solvation for 600 Wh kg−1 lithium batteries, Nature (September 24, 2025)

| Category | Items |

|---|---|

| Datasets | Electrochemical cycling data (coin cells), Electrochemical cycling data (anode-free pouch cells), Materials characterization datasets (NMR, Raman, XPS, TOF-SIMS, TEM, SEM, DSC, GITT, EIS, DEMS), DFT calculation data |

| Models | None |

| Tasks | Experimental Design, Optimization, Data Generation |

| Learning Methods | None |

| Performance Highlights | None |

| Application Domains | Battery materials, Solid-state batteries, Lithium metal batteries (Li-rich Mn-based layered oxide cathodes), Electrochemistry, Energy storage, Materials science / polymer electrolytes, Computational chemistry (DFT) |

392. EDBench: Large-Scale Electron Density Data for Molecular Modeling, Preprint (September 24, 2025)

| Category | Items |

|---|---|

| Datasets | EDBench, ED5-EC, ED5-OE, ED5-MM, ED5-OCS, ED5-MER, ED5-EDP, PCQM4Mv2, Referenced QC datasets (QM7, QM9, QM7-X, PubChemQC, MD17, MD22, WS22, QH9, MultiXC-QM9, MP, ECD, QMugs, ∇2DFT, QM9-VASP, Materials Project) |

| Models | Transformer, Multi-Layer Perceptron, Graph Neural Network |

| Tasks | Regression, Binary Classification, Information Retrieval, Density Estimation, Data Generation |

| Learning Methods | Supervised Learning, Contrastive Learning, Pre-training, Fine-Tuning, Representation Learning, End-to-End Learning |

| Performance Highlights | E1_MAE: 243.49 ± 74.72, E2_MAE: 325.65 ± 160.17, E3_MAE: 858.77 ± 496.74, E4_MAE: 389.24 ± 217.51, E5_MAE: 17.54 ± 10.85, E6_MAE: 243.49 ± 74.73, E1_MAE: 190.77 ± 1.98, E2_MAE: 109.21 ± 2.82, E3_MAE: 369.88 ± 1.34, E4_MAE: 150.05 ± 0.27, E5_MAE: 8.13 ± 0.51, E6_MAE: 190.77 ± 1.98, HOMO-2_MAE_x100: 1.73 ± 0.01, HOMO-1_MAE_x100: 1.68 ± 0.01, HOMO-0_MAE_x100: 1.92 ± 0.01, LUMO+0_MAE_x100: 3.08 ± 0.05, LUMO+1_MAE_x100: 2.86 ± 0.05, LUMO+2_MAE_x100: 3.05 ± 0.02, LUMO+3_MAE_x100: 3.01 ± 0.02, HOMO-2_MAE_x100: 1.75 ± 0.02, HOMO-1_MAE_x100: 1.72 ± 0.02, HOMO-0_MAE_x100: 1.98 ± 0.00, LUMO+0_MAE_x100: 3.21 ± 0.01, LUMO+1_MAE_x100: 3.02 ± 0.02, LUMO+2_MAE_x100: 3.25 ± 0.04, LUMO+3_MAE_x100: 3.20 ± 0.03, Dipole_X_MAE: 0.9123 ± 0.0203, Dipole_Y_MAE: 0.9605 ± 0.0053, Dipole_Z_MAE: 0.7540 ± 0.0068, Magnitude_MAE: 0.7397 ± 0.0467, Dipole_X_MAE: 0.8818 ± 0.0010, Dipole_Y_MAE: 0.9427 ± 0.0008, Dipole_Z_MAE: 0.7416 ± 0.0023, Magnitude_MAE: 0.6820 ± 0.0005, Accuracy: 55.57 ± 2.14, ROC-AUC: 55.97 ± 5.17, AUPR: 57.62 ± 3.91, F1-Score: 66.96 ± 2.08, Accuracy: 57.65 ± 0.18, ROC-AUC: 60.48 ± 0.38, AUPR: 61.54 ± 0.31, F1-Score: 61.41 ± 1.02, GeoFormer + PointVector_ED→MS_Top-1: 17.67 ± 2.10, GeoFormer + PointVector_ED→MS_Top-3: 46.09 ± 4.53, GeoFormer + PointVector_ED→MS_Top-5: 67.63 ± 5.92, GeoFormer + PointVector_MS→ED_Top-1: 27.01 ± 1.69, GeoFormer + PointVector_MS→ED_Top-3: 59.02 ± 2.49, GeoFormer + PointVector_MS→ED_Top-5: 77.42 ± 3.01, GeoFormer + X-3D_ED→MS_Top-1: 68.32 ± 3.70, GeoFormer + X-3D_ED→MS_Top-3: 92.18 ± 2.41, GeoFormer + X-3D_ED→MS_Top-5: 97.31 ± 1.29, GeoFormer + X-3D_MS→ED_Top-1: 70.01 ± 2.93, GeoFormer + X-3D_MS→ED_Top-3: 92.08 ± 2.01, GeoFormer + X-3D_MS→ED_Top-5: 97.17 ± 0.92, EquiformerV2 + PointVector_ED→MS_Top-1: 10.24 ± 1.28, EquiformerV2 + PointVector_ED→MS_Top-3: 32.47 ± 2.69, EquiformerV2 + PointVector_ED→MS_Top-5: 53.42 ± 2.67, EquiformerV2 + PointVector_MS→ED_Top-1: 22.18 ± 0.64, EquiformerV2 + PointVector_MS→ED_Top-3: 54.61 ± 2.89, EquiformerV2 + PointVector_MS→ED_Top-5: 76.83 ± 2.90, EquiformerV2 + X-3D_ED→MS_Top-1: 78.71 ± 0.69, EquiformerV2 + X-3D_ED→MS_Top-3: 94.78 ± 0.40, EquiformerV2 + X-3D_ED→MS_Top-5: 98.13 ± 0.07, EquiformerV2 + X-3D_MS→ED_Top-1: 78.36 ± 0.65, EquiformerV2 + X-3D_MS→ED_Top-3: 94.19 ± 0.14, EquiformerV2 + X-3D_MS→ED_Top-5: 97.74 ± 0.29, ρτ=0.1_MAE: 0.3362 ± 0.2900, ρτ=0.1_Pearson(%): 81.0 ± 8.1, ρτ=0.1_Spearman(%): 56.4 ± 13.7, ρτ=0.1_Time_sec_per_mol: 0.024, ρτ=0.15_MAE: 0.0463 ± 0.0157, ρτ=0.15_Pearson(%): 98.0 ± 6.3, ρτ=0.15_Spearman(%): 87.0 ± 2.7, ρτ=0.15_Time_sec_per_mol: 0.015, ρτ=0.2_MAE: 0.0448 ± 0.0133, ρτ=0.2_Pearson(%): 99.2 ± 0.8, ρτ=0.2_Spearman(%): 91.0 ± 9.1, ρτ=0.2_Time_sec_per_mol: 0.013, DFT_Time_sec_per_mol_for_comparison: 245.8, ED5-EDP_MAE: 0.018 ± 0.003, ED5-EDP_Pearson: 0.993 ± 0.004, ED5-EDP_Spearman: 0.381 ± 0.162, EDMaterial-EDP_MAE: 0.118 ± 0.029, EDMaterial-EDP_Pearson: 0.918 ± 0.034, EDMaterial-EDP_Spearman: 0.633 ± 0.115, X-3D_original_HOMO-2_MAE_x100: 1.75 ± 0.02, X-3D (full)_HOMO-2_MAE_x100: 1.5797, X-3D_original_HOMO-1_MAE_x100: 1.72 ± 0.02, X-3D (full)_HOMO-1_MAE_x100: 1.6359, X-3D_original_HOMO-0_MAE_x100: 1.98 ± 0.00, X-3D (full)_HOMO-0_MAE_x100: 1.9104, X-3D_original_LUMO+0_MAE_x100: 3.21 ± 0.01, X-3D (full)_LUMO+0_MAE_x100: 2.9981, X-3D_original_LUMO+1_MAE_x100: 3.02 ± 0.02, X-3D (full)_LUMO+1_MAE_x100: 2.7028, X-3D_original_LUMO+2_MAE_x100: 3.25 ± 0.04, X-3D (full)_LUMO+2_MAE_x100: 2.8725, X-3D_original_LUMO+3_MAE_x100: 3.20 ± 0.03, X-3D (full)_LUMO+3_MAE_x100: 2.8708, DFT_E1_MAE: 224.13 ± 43.47, DFT_E2_MAE: 155.85 ± 28.75, DFT_E3_MAE: 451.59 ± 58.53, DFT_E4_MAE: 190.47 ± 25.62, DFT_E5_MAE: 9.57 ± 1.56, DFT_E6_MAE: 224.13 ± 43.47, DFT_Mean: 209.29, HGEGNN(2024)_Mean: 186.38, HGEGNN(2025)_Mean: 196.11, HGEGNN(2026)_Mean: 182.75, ED5-OE_xi=2048_mean_MAE_x100: 2.48, ED5-OE_xi=512_mean_MAE_x100: 2.56, ED5-OE_xi=1024_mean_MAE_x100: 2.75, ED5-OE_xi=4096_mean_MAE_x100: 2.70, ED5-OE_xi=8192_mean_MAE_x100: 2.60 |

| Application Domains | Molecular modeling, Quantum chemistry, Machine-learning force fields (MLFFs), Drug discovery / virtual screening, Materials science (periodic systems / crystalline solids), High-throughput quantum-aware modeling |

391. Active Learning for Machine Learning Driven Molecular Dynamics, Preprint (September 21, 2025)

| Category | Items |

|---|---|

| Datasets | Chignolin protein (in-house benchmark suite) |

| Models | Graph Neural Network |

| Tasks | Regression, Data Generation, Dimensionality Reduction, Distribution Estimation |

| Learning Methods | Active Learning, Supervised Learning |

| Performance Highlights | TICA_W1_before: 1.15023, TICA_W1_after: 0.77003, TICA_W1_percent_change: -33.05%, Bond_length_W1_before: 0.00043, Bond_length_W1_after: 0.00022, Bond_length_W1_percent_change: -48.84%, Bond_angle_W1_before: 0.11036, Bond_angle_W1_after: 0.10148, Bond_angle_W1_percent_change: -8.05%, Dihedral_W1_before: 0.25472, Dihedral_W1_after: 0.36378, Reaction_coordinate_W1_before: 0.15141, Reaction_coordinate_W1_after: 0.38302, loss_function: mean-squared error (MSE) between predicted CG forces and projected AA forces (force matching), W1_TICA_after_active_learning: 0.77003 |

| Application Domains | Molecular Dynamics, Protein conformational modeling, Coarse-grained simulations for biomolecules, ML-driven drug discovery / computational biophysics |

390. 3D multi-omic mapping of whole nondiseased human fallopian tubes at cellular resolution reveals a large incidence of ovarian cancer precursors, Preprint (September 21, 2025)

| Category | Items |

|---|---|

| Datasets | nPOD donor fallopian tube cohort (this paper), Visium Cytassist spatial transcriptomics data (ROIs from donor tubes), CODEX multiplexed imaging dataset (25-marker panel), SRS-HSI spatial metabolomics ROIs, Derived/processed imaging dataset (H&E + IHC stacks) |

| Models | Convolutional Neural Network, Clustering (unsupervised) |

| Tasks | Structured Prediction, Object Detection, Clustering, Dimensionality Reduction, Feature Extraction |

| Learning Methods | Supervised Learning, Transfer Learning, Fine-Tuning, Unsupervised Learning |

| Performance Highlights | tissue_segmentation_accuracy: 95.2%, epithelial_subtyping_accuracy: 93.2%, nuclei_segmentations_extracted: 2.19 billion, images_processed_for_nuclei: 2,452 H&E-stained images, auto_highlighted_p53_Ki67_locations: 1,285 (mean 257, median 211 per fallopian tube), pathologist_validated_STICs: 99 STICs identified (13 proliferatively active STICs, 86 proliferatively dormant STICs) and 11 p53 signatures across 5 donors, SRS_HSI_PCA_kmeans_cluster_finding: distinct metabolite clusters separating lesion vs control ROIs (qualitative), CODEX_clusters: 30 unsupervised clusters combined into 19 annotated cell phenotypes, single_cell_count: 972,276 cells segmented for CODEX WSI, PAGA_connectivity_insights: identified interactions linking TAMs, regulatory DCs, activated T cells and CD8+ memory T cells; STIC cells associated with proliferating epithelial cells (qualitative topology results) |

| Application Domains | Histopathology / Digital Pathology, Oncology (ovarian cancer precursor detection and characterization), Spatial multi-omics integration (spatial proteomics, spatial transcriptomics, spatial metabolomics), Medical image analysis (3D reconstruction and registration) |

389. De novo Design of All-atom Biomolecular Interactions with RFdiffusion3, Preprint (September 18, 2025)

| Category | Items |

|---|---|

| Datasets | Protein Data Bank (PDB) - all complexes deposited through December 2024, AlphaFold2 (AF2) distillation structures (Hsu et al.), Atomic Motif Enzyme (AME) benchmark, Binder design benchmark targets (PD-L1, InsulinR, IL-7Ra, Tie2, IL-2Ra), DNA binder evaluation targets (PDB IDs: 7RTE, 7N5U, 7M5W), Small-molecule binding benchmark (four molecules: FAD, SAM, IAI, OQO), Experimental enzyme design screening set (esterase / cysteine hydrolase designs), Experimental DNA-binding designs |

| Models | Denoising Diffusion Probabilistic Model, Transformer, U-Net, Attention Mechanism, Cross-Attention |

| Tasks | Synthetic Data Generation, Data Generation, Clustering, Sequence-to-Sequence |

| Learning Methods | Self-Supervised Learning, Pre-training, Fine-Tuning |

| Performance Highlights | inference_speedup_vs_RFD2: approximately 10x, parameters: 168M trainable parameters (RFD3) vs ~350M for AF3, unconditional_refolding_rate: 98% of designs have at least one sequence predicted by AF3 to fold within 1.5 Å RMSD (out of 8 ProteinMPNN sequences), diversity_example: 41 clusters out of 96 generations between length 100-250 (TM-score cutoff 0.5), binder_unique_successful_clusters_RFD3_avg: 8.2 (average unique successful clusters per target, TM-score clustering threshold 0.6), binder_unique_successful_clusters_RFD1_avg: 1.4 (comparison), DNA_monomer_pass_rate_<5Å_DNA-aligned_RMSD: 8.67%, DNA_dimer_pass_rate_<5Å_DNA-aligned_RMSD: 6.67%, DNA_monomer_pass_rate_interface_fixed_after_LigandMPNN: 6.5%, DNA_dimer_pass_rate_interface_fixed_after_LigandMPNN: 5.5%, small_molecule_binder_success_criteria: AF3: backbone RMSD ≤ 1.5 Å; backbone-aligned ligand RMSD ≤ 5 Å; Interface min PAE ≤ 1.5; ipTM ≥ 0.8, small_molecule_result_summary: RFD3 significantly outperforms RFdiffusionAA across the four tested molecules; RFD3 designs are more diverse, novel relative to training set, and have lower Rosetta ∆∆G binding energies (no single-number aggregates reported in main text), AME_win_count: RFD3 outperforms RFD2 on 37 of 41 cases (90%), AME_residue_islands_>4_pass_rate_RFD3: 15%, AME_residue_islands_>4_pass_rate_RFD2: 4%, experimental_DNA_binder_screen_results: 5 designs synthesized; 1 bound with EC50 = 5.89 ± 2.15 μM (yeast surface display), experimental_enzyme_screen_results: 190 designs screened; 35 multi-turnover designs observed; best enzyme Kcat/Km = 3557 |

| Application Domains | de novo protein design (generative biomolecular design), protein-protein binder design, protein-DNA binder design, protein-small molecule binder design, enzyme active site scaffolding and enzyme design, symmetric oligomer design, biomolecular modeling and structural prediction (evaluation with AlphaFold3) |

388. DeepSeek-R1 incentivizes reasoning in LLMs through reinforcement learning, Nature (September 17, 2025)

| Category | Items |

|---|---|

| Datasets | AIME 2024, MMLU, MMLU-Redux, MMLU-Pro, DROP, C-Eval, IF-Eval (IFEval), FRAMES, GPQA Diamond, SimpleQA / C-SimpleQA, CLUEWSC, AlpacaEval 2.0, Arena-Hard, SWE-bench Verified, Aider-Polyglot, LiveCodeBench, Codeforces, CNMO 2024 (Chinese National High School Mathematics Olympiad), MATH-500, Cold-start conversational dataset (paper-curated), Preference pairs for helpful reward model, Safety dataset for safety reward model, Released RL prompts and rejection-sampling data samples |

| Models | Transformer, Attention Mechanism |

| Tasks | Question Answering, Reading Comprehension, Multi-class Classification, Text Generation, Sequence-to-Sequence, Information Retrieval |

| Learning Methods | Reinforcement Learning, Supervised Learning, Fine-Tuning, Knowledge Distillation, Policy Gradient |

| Performance Highlights | AIME 2024 (pass@1): 77.9%, AIME 2024 (self-consistency cons@16): 86.7% (with self-consistency decoding), AIME training start baseline (pass@1): 15.6% (initial during RL trajectory), English MMLU (EM): 90.8, MMLU-Redux (EM): 92.9, MMLU-Pro (EM): 84.0, DROP (3-shot F1): 92.2, IF-Eval (Prompt Strict): 83.3, GPQA Diamond (Pass@1): 71.5, SimpleQA (Correct): 30.1, FRAMES (Acc.): 82.5, AlpacaEval 2.0 (LC-winrate): 87.6, Arena-Hard (vs GPT-4-1106): 92.3, Code LiveCodeBench (Pass@1-COT): 65.9, Codeforces (Percentile): 96.3, Codeforces (Rating): 2,029, SWE-bench Verified (Resolved): 49.2, Aider-Polyglot (Acc.): 53.3, AIME 2024 (Pass@1): 79.8, MATH-500 (Pass@1): 97.3, CNMO 2024 (Pass@1): 78.8, CLUEWSC (EM): 92.8, C-Eval (EM): 91.8, C-SimpleQA (Correct): 63.7, Code LiveCodeBench (Pass@1-COT): 63.5, Codeforces (Percentile): 90.5, AIME 2024 (Pass@1): 74.0, MATH-500 (Pass@1): 95.9, CNMO 2024 (Pass@1): 73.9, helpful preference pairs curated: 66,000 pairs, safety annotations curated: 106,000 prompts, qualitative statement: Distilled models “exhibit strong reasoning capabilities, surpassing the performance of their original instruction-tuned counterparts.” |

| Application Domains | Mathematics (math competitions, AIME, CNMO, MATH-500), Computer programming / Software engineering (Codeforces, LiveCodeBench, SWE-bench Verified), Biology (graduate-level problems), Physics (graduate-level problems), Chemistry (graduate-level problems), Instruction following / conversational AI (IF-Eval, AlpacaEval, Arena-Hard), Information retrieval / retrieval-augmented generation (FRAMES), Safety and alignment evaluation (safety datasets, reward models) |

386. Modeling-Making-Modulating High-Entropy Alloy with Activated Water-Dissociation Centers for Superior Electrocatalysis, Journal of the American Chemical Society (September 17, 2025)

| Category | Items |

|---|---|

| Datasets | DFT adsorption dataset for PtPdRhRuMo HEA (this work), Open Catalyst Project pretrained graph neural networks (OC20/OC22), Predicted composition screening set (CatBoost evaluations) |

| Models | CatBoost, Linear Model, Support Vector Machine, Random Forest, Gradient Boosting Tree, XGBoost, Multi-Layer Perceptron, Graph Neural Network, Graph Convolutional Network |

| Tasks | Regression, Hyperparameter Optimization, Feature Selection, Optimization, Representation Learning |

| Learning Methods | Supervised Learning, Pre-training, Transfer Learning, Representation Learning |

| Performance Highlights | MAE_train_eV: ∼0.03, MAE_test_eV: ∼0.07, MAE_test_eV: < 0.1 |

| Application Domains | Electrocatalysis, Methanol Oxidation Reaction (MOR), Catalyst design for energy conversion, High-Entropy Alloy (HEA) materials discovery, Computational materials science (DFT + ML integration) |

385. Learning the natural history of human disease with generative transformers, Nature (September 17, 2025)

| Category | Items |

|---|---|

| Datasets | UK Biobank (first-occurrence disease data), Danish national registries (Danish National Patient Registry, Danish Register of Causes of Death), Delphi-2M-sampled synthetic dataset |

| Models | Transformer, GPT, BERT, Linear Model, Encoder-Decoder |

| Tasks | Language Modeling, Multi-class Classification, Binary Classification, Survival Analysis, Time Series Forecasting, Data Generation, Representation Learning |

| Learning Methods | Self-Supervised Learning, Supervised Learning, Transfer Learning, Stochastic Learning, Backpropagation, Maximum Likelihood Estimation, Representation Learning, End-to-End Learning |

| Performance Highlights | average age–sex-stratified AUC (internal validation, next-token prediction, averaged across diagnoses): ≈0.76, AUC (death, age-stratified, internal validation): 0.97, AUC (long-term, 10 years horizon average): 0.70 (average AUC decreases from ~0.76 to 0.70 after 10 years), calibration: Predicted rates closely match observed counts in calibration analyses in 5-year age brackets (qualitative, shown in Extended Data Fig. 3), time-to-event prediction accuracy (aggregate): Model provides consistent estimates of inter-event times (Fig. 1g and methods describe log-likelihood for exponential waiting times), synthetic-trained-model AUC (age–sex-stratified average on observed validation data): 0.74 (trained exclusively on Delphi-2M synthetic data; ~3 percentage points lower than original Delphi-2M), fraction of correctly predicted disease tokens in year 1 of sampling: 17% (compared with 12–13% using sex and age alone), fraction correct after 20 years: <14%, Dementia AUC (Transformer baseline): 0.79, Death AUC (Transformer baseline): 0.78, CVD AUC (Transformer baseline shown alongside others): 0.69 (Transformer as listed in Fig. 2f) |

| Application Domains | Population-scale human disease progression modeling, Epidemiology / public health planning (disease burden projection), Clinical risk prediction (CVD, dementia, diabetes, death, and >1,000 ICD-10 diagnoses), Synthetic data generation for privacy-preserving biomedical model training, Explainable AI for healthcare (embedding/SHAP-based interpretability), Precision medicine / individualized prognostication |

384. Discovery of Unstable Singularities, Preprint (September 17, 2025)

| Category | Items |

|---|---|

| Datasets | Córdoba-Córdoba-Fontelos (CCF) model collocation data (synthetic, generated in self-similar coordinates), Incompressible Porous Media (IPM) with boundary collocation data (synthetic, generated in self-similar coordinates), 2D Boussinesq (with boundary) collocation data (synthetic, generated in self-similar coordinates) |

| Models | Multi-Layer Perceptron, Feedforward Neural Network |

| Tasks | Regression, Optimization, Hyperparameter Optimization |

| Learning Methods | Self-Supervised Learning, Multi-Stage Training, Backpropagation, Gradient Descent, Stochastic Learning, Mini-Batch Learning |

| Performance Highlights | CCF stable log10(max residual): -13.714, CCF 1st unstable log10(max residual): -13.589, CCF 2nd unstable log10(max residual): -6.664, IPM stable log10(max residual): -11.183, IPM 1st unstable log10(max residual): -10.510, IPM 2nd unstable log10(max residual): -8.101, IPM 3rd unstable log10(max residual): -7.526, Boussinesq stable log10(max residual): -8.178, Boussinesq 1st unstable log10(max residual): -8.038, Boussinesq 2nd unstable log10(max residual): -7.772, Boussinesq 3rd unstable log10(max residual): -7.558, Boussinesq 4th unstable log10(max residual): -7.020, CCF stable residual (order): O(10^-13), CCF 1st unstable residual (order): O(10^-13), IPM stable residual (order): O(10^-11), IPM 1st unstable residual (order): O(10^-10), convergence to O(10^-8) with GN: ≈50k iterations (~3 A100 GPU hours), λ for CCF 1st unstable (from literature and this work agreement): λ1 ≈ 0.6057 (Wang et al.); reproduced/improved here, λ for CCF 2nd unstable (this work): λ2 = 0.4703 (text) |

| Application Domains | Mathematical fluid dynamics, Partial differential equations (PDEs) / numerical analysis, Singularity formation and mathematical physics, Computer-assisted proofs (rigorous numerics) |

382. A Generative Foundation Model for Antibody Design, Preprint (September 16, 2025)

| Category | Items |

|---|---|

| Datasets | SAbDab (training set up to 2022-12-31), SAb23H2 / SAb-23H2-Ab (test set), SAb-23H2-Nano (nanobody test set), PPSM pre-training corpora (UniRef50, PDB multimers, PPI, OAS antibody pairs), IgDesign test set (used for inverse design benchmarking), PD-L1 experimental de novo design dataset (wet-lab candidates), Case-study experimental targets (datasets of antigens used in experiments) |

| Models | Denoising Diffusion Probabilistic Model, Diffusion Model, Transformer, Attention Mechanism, Self-Attention Network, Multi-Head Attention, Graph Neural Network |

| Tasks | Structured Prediction, Sequence-to-Sequence, Data Generation, Optimization, Ranking, Regression |

| Learning Methods | Pre-training, Fine-Tuning, Self-Supervised Learning, Curriculum Learning, Transfer Learning, Representation Learning, Fine-Tuning (task-specific) + Frequency-based selection (screening) |

| Performance Highlights | TM-Score: 0.9591, lDDT: 0.8956, RMSD (antibody): 2.1997 Å, DockQ: 0.2986, iRMS: 6.2195 Å, LRMS: 19.4888 Å, Success Rate (SR, DockQ>0.23): 0.4667, AAR (CDR L2): median ≈ 0.8 (Fig. 2f described); (Table B4 AAR values for IgGM: L1 0.750, L2 0.743, L3 0.635, H1 0.740, H2 0.644, H3 0.360), Protein A binding (VHH3 variants): VHH3-4M KD = 387 nM; VHH3-5M KD = 384 nM; VHH3-WT no binding (KD >10,000 nM), Humanization (mouse→human) KD comparisons: Mouse KD = 0.120 nM; Human-1 KD = 0.171 nM; Human-2 KD = 0.195 nM; Human-3 KD = 0.395 nM; Human-4 KD = 0.486 nM; Human-5 KD = 0.139 nM, VH-Humanness Score (average): IgGM designed average 0.909 vs original murine 0.676 (text); CDR3 RMSD: IgGM 0.750 Å vs BioPhi 0.983 Å, Structural preservation backbone RMSD: average backbone RMSD reported as 1.10 ± 0.06 Å, I7 (anti-IL-33) KD improvement: Original KD = 52.02 nM → Best variant KD = 9.753 nM (5.3-fold improvement), I7 EC50 improvements (first round): Mutants M1, M7, M10 achieved 4–6× increase in affinity (ELISA), Broadly neutralizing R1-32 variants (SARS-CoV-2 RBD) KD changes: Q61E enabled binding to Lambda and BQ.1.1 from no binding (examples: Q61E-Lambda KD 948 nM; Q61E-BQ.1.1 KD 948 nM), subsequent N58D,Q61E improved BQ.1.1 KD to 107 nM (~9–10× improvement compared to earlier), PD-L1 de novo design success rate: 7/60 candidates had nanomolar or picomolar KD (success rate 7/60 ≈ 11.7%), Top de novo binder KD range: 0.084 nM (D1) to 2.89 nM (D7), Example D1 KD: 0.084 nM; D1 IC50 = 7.29 nM; D1 displaced PD-1 from PD-L1 in competition assays, Ablation impact (w/o PPSM): Comparative ablation: w/o PPSM AAR 0.322 vs full IgGM AAR 0.360; DockQ 0.233 vs 0.246; SR 0.426 vs 0.433 (Table B5), Contextual effect: PPSM improves interface bias and sequence recovery marginally |

| Application Domains | Antibody engineering / design, Structural biology (protein structure prediction and docking), Therapeutic antibody discovery (immuno-oncology PD-L1, anti-viral SARS-CoV-2), Protein engineering (framework optimization, humanization), Computational biology / bioinformatics (protein sequence-structure co-design) |

381. MSnLib: efficient generation of open multi-stage fragmentation mass spectral libraries, Nature Methods (September 15, 2025)

| Category | Items |

|---|---|

| Datasets | MSnLib (this work), MCEBIO library (subset used in analyses), NIH NPAC ACONN (NIHNP), OTAVAPEP (peptidomimetic library), ENAMDISC (Discovery Diverse Set DDS-10 from Enamine), ENAMMOL (Enamine + Molport mixture, incl. carboxylic acid fragment library), MCESCAF (MCE 5K Scaffold Library), MCEDRUG (FDA-approved drugs subset from MCE), Evaluation dataset: drug-incubated bacterial cultures (MSV000096589), Public spectral libraries (comparison references) |

| Models | None |

| Tasks | Classification, Clustering, Dimensionality Reduction, Feature Extraction, Information Retrieval |

| Learning Methods | Supervised Learning, Unsupervised Learning |

| Performance Highlights | None |

| Application Domains | clinical metabolomics, natural product discovery, exposomics, untargeted liquid chromatography–mass spectrometry (LC–MS) annotation, microbial metabolite analysis / metabolomics |

380. Spatial gene expression at single-cell resolution from histology using deep learning with GHIST, Nature Methods (September 15, 2025)

| Category | Items |

|---|---|

| Datasets | BreastCancer1 (10x Xenium), BreastCancer2 (10x Xenium), LungAdenocarcinoma (10x Xenium), Melanoma (10x Xenium), BreastCancerILC and BreastCancerIDC (10x Xenium), HER2ST spatial transcriptomics dataset, NuCLS dataset, TCGA-BRCA (The Cancer Genome Atlas - Breast Invasive Carcinoma), Mixed DCIS cohort (in-house), Single-cell reference datasets (breast, melanoma, lung) |

| Models | U-Net, Convolutional Neural Network, DenseNet, VGG, ResNet, Transformer, Graph Neural Network, Multi-Head Attention, Cross-Attention, Encoder-Decoder, Multi-Layer Perceptron, Linear Model |

| Tasks | Regression, Image-to-Image Translation, Semantic Segmentation, Multi-class Classification, Survival Analysis, Clustering, Feature Extraction |

| Learning Methods | Multi-Task Learning, Supervised Learning, Weakly Supervised Learning, End-to-End Learning, Pre-training, Self-Supervised Learning, Transfer Learning, Gradient Descent |

| Performance Highlights | cell-type_accuracy_BreastCancer1: 0.75, cell-type_accuracy_BreastCancer2: 0.66, median_PCC_top20_SVGs: 0.7, median_PCC_top50_SVGs: 0.6, gene_corr_SCD: 0.74, gene_corr_FASN: 0.77, gene_corr_FOXA1: 0.8, gene_corr_EPCAM: 0.84, melanoma_celltype_proportion_corr: 0.92, lung_adenocarcinoma_celltype_proportion_corr: 0.97, PCC_all_genes: 0.16, SSIM_all_genes: 0.1, PCC_HVGs: 0.2, PCC_SVGs: 0.27, SSIM_HVGs: 0.17, SSIM_SVGs: 0.26, RMSE_all_genes: 0.2, RMSE_SVGs: 0.22, top_gene_correlations: GNAS 0.42; FASN 0.42; SCD 0.34; MYL12B 0.32; CLDN4 0.32, C-index_GHIST: 0.57, C-index_RNASeq_STgene_baseline: 0.55, Kaplan_Meier_logrank_P: 0.017, PCC_all_genes: 0.14, SSIM_all_genes: 0.08, PCC_all_genes: 0.11, SSIM_all_genes: 0.07 |

| Application Domains | Histopathology (H&E imaging), Spatial transcriptomics (subcellular and spot-based SRT), Cancer (breast cancer, HER2+ subtype, luminal cohort), Lung adenocarcinoma, Melanoma, Multi-omics integration (TCGA multi-omics), Biomarker discovery and survival prognosis |

378. Bridging histology and spatial gene expression across scales, Nature Methods (September 15, 2025)

| Category | Items |

|---|---|

| Datasets | 10x Xenium, 10x Visium, The Cancer Genome Atlas (TCGA) H&E slides, Various cancer datasets (breast cancer, lung adenocarcinoma, melanoma), Gastric cancer samples (used with iSCALE), Multiple sclerosis brain tissue (used with iSCALE), Single-cell RNA-sequencing reference atlas, Subcellular-resolution spatial transcriptomics data (general) |

| Models | Multi-Layer Perceptron, Convolutional Neural Network, Encoder-Decoder, Graph Neural Network |

| Tasks | Regression, Image Super-Resolution, Multi-class Classification, Semantic Segmentation, Synthetic Data Generation |

| Learning Methods | Multi-Task Learning, Supervised Learning, Unsupervised Learning, Transfer Learning |

| Performance Highlights | None |

| Application Domains | Spatial transcriptomics, Histopathology / H&E image analysis, Cancer biology (breast cancer, lung adenocarcinoma, melanoma, gastric cancer), Neuropathology (multiple sclerosis brain tissue), Biobanks and archived clinical cohorts (e.g., TCGA), Digital spatial omics and tissue-wide molecular mapping |

377. Structural Insights into Autophagy in the AlphaFold Era, Journal of Molecular Biology (September 15, 2025)

| Category | Items |

|---|---|

| Datasets | None |

| Models | None |

| Tasks | None |

| Learning Methods | None |

| Performance Highlights | None |

| Application Domains | Autophagy research, Structural biology / protein structure prediction, Molecular biology, Biophysics, Therapeutic/drug discovery (rational drug design) |

376. Scaling up spatial transcriptomics for large-sized tissues: uncovering cellular-level tissue architecture beyond conventional platforms with iSCALE, Nature Methods (September 15, 2025)

| Category | Items |

|---|---|

| Datasets | Gastric cancer Xenium sample - BS06-9313-8_Tumor (Tumor), Gastric Xenium sample - Normal 1 (Gastric Patient 1, Normal 1), Gastric Xenium sample - Normal 2 (Gastric Patient 2, Normal 2), Human large-sized MS brain sample - MS330-AL (MS Sample 1), Human large-sized MS brain sample - MS330-CAL (MS Sample 2), Pseudo-Visium daughter captures (simulated from Xenium full-slide data) |

| Models | Multi-Layer Perceptron, Vision Transformer |

| Tasks | Regression, Semantic Segmentation, Multi-class Classification, Clustering, Feature Extraction, Dimensionality Reduction |

| Learning Methods | Weakly Supervised Learning, Supervised Learning, Transfer Learning, Out-of-Distribution Learning, Mini-Batch Learning, Gradient Descent, End-to-End Learning |

| Performance Highlights | alignment_accuracy: 99% (semiautomatic alignment algorithm accuracy for daughter captures), RMSE: iSCALE-Seq outperformed iStar across RMSE (displayed in Fig. 3a; lower is better), SSIM: iSCALE-Seq outperformed iStar across SSIM (displayed in Fig. 3a; higher is better), Pearson_correlation: iSCALE-Seq achieved higher Pearson correlations vs iStar; ~50% of genes achieved r > 0.45 at 32 µm resolution for iSCALE-Seq; example per-gene r values shown (e.g., iSCALE-Seq r = 0.5037 for one gene in Fig. 3c), adjusted_Rand_index: 0.74 (segmentation result from out-of-sample predictions closely aligns with in-sample segmentation; reported when comparing segmentations in normal gastric out-of-sample experiment), RMSE: iSCALE-Img achieves low RMSE in in-sample evaluations; comparable performance to iSCALE-Seq (Fig. 3a), SSIM: High SSIM relative to competing methods (Fig. 3a), Pearson_correlation: iSCALE-Img had generally low Pearson at superpixel level but improved with larger superpixel sizes; example reported correlations improved with resolution, Spearman_correlation: Out-of-sample predictions: at 64 µm resolution ≈50% of genes achieved Spearman r > 0.45; overall Spearman reported across resolutions (Fig. 4c), chi_squared_concordance: 99 of the top 100 HVGs exhibited significantly concordant out-of-sample predicted expression patterns vs ground truth (chi-squared statistic with Bonferroni correction), adjusted_Rand_index: 0.74 (segmentation agreement between out-of-sample and in-sample segmentations in Normal gastric data) |

| Application Domains | Spatial transcriptomics, Histopathology / digital pathology (H&E image analysis), Oncology (gastric cancer tissue analysis), Neurology / Multiple sclerosis brain tissue analysis, Single-cell and spatial genomics integration |

375. Integrating diverse experimental information to assist protein complex structure prediction by GRASP, Nature Methods (September 15, 2025)

| Category | Items |

|---|---|

| Datasets | PSP dataset, Self-curated benchmark dataset (contact RPR and IR), Simulated XL dataset (SDA XL simulations), Experimental XL dataset (real-world XL cases), CL dataset (covalent labeling), CSP dataset (chemical shift perturbation), Simulated DMS (BM5.5) dataset, Hitawala–Gray simulated DMS dataset, Experimental DMS dataset (SARS-CoV-2 RBD antibodies), Mitochondria in situ XL-MS dataset |

| Models | Transformer, Attention Mechanism, Self-Attention Network, Multi-Head Attention, Ensemble (ensemble prediction from multiple checkpoints) |

| Tasks | Link Prediction, Ranking, Clustering |

| Learning Methods | Fine-Tuning, Pre-training, Transfer Learning, Ensemble Learning, Supervised Learning |

| Performance Highlights | benchmark_mean_DockQ_with_2contact_RPRs: 0.35, benchmark_success_rate>0.23_with_2_contact_RPRs: 52.7%, IRs_mean_DockQ_for_4_10_20_restraints: 0.24 / 0.34 / 0.41, IRs_success_rate_for_4_10_20_restraints: 35.3% / 51.9% / 63.2%, AFM_without_restraints_mean_DockQ: 0.17, AF3_without_restraints_mean_DockQ: 0.23, simulated_XL_mean_DockQ_1%: 0.18, simulated_XL_mean_DockQ_2%: 0.21, simulated_XL_mean_DockQ_5%: 0.27, HADDOCK_mean_DockQ_1%_2%_5%: 0.06 / 0.08 / 0.10, AlphaLink_mean_DockQ_1%_2%_5%: 0.12 / 0.13 / 0.20, restraint_satisfaction_median_at_2%_coverage_all_correct: 69% (all) / 81% (correct restraints), iterative_noise_filtering_effect: improved DockQ and pLDDT across coverage levels, experimental_XL_mean_DockQ_for_9_samples: 0.48 (GRASP) vs 0.31 (AFM) vs 0.38 (AlphaLink) vs 0.05 (ClusPro) vs 0.05 (HADDOCK), example_4G3Y_DockQ: GRASP 0.77 vs AFM 0.03; AlphaLink 0.67; HADDOCK 0.02, CL_average_DockQ_GRASP: 0.58, CL_average_DockQ_AFM: 0.45, ColabDock_average_DockQ: 0.56, example_4INS8_DockQ: GRASP 0.56 vs AFM 0.43 vs HADDOCK 0.18 vs ClusPro 0.06 vs ColabDock 0.33, CSP_average_DockQ_GRASP: 0.81 (4 cases), CSP_average_DockQ_AFM/HADDOCK/ClusPro/ColabDock: AFM 0.39 / HADDOCK 0.28 / ClusPro 0.21 / ColabDock 0.5, example_4G6M_DockQ_GRASP: 0.9, example_4G6J_DockQ_GRASP: 0.79, BM5.5_median_DockQ_GRASP: 0.64, BM5.5_success_rate_GRASP: 71.6%, Hitawala–Gray_median_DockQ_antibodies_GRASP: 0.477, Hitawala–Gray_median_DockQ_nanobodies_GRASP: 0.541, Hitawala–Gray_success_rate_antibodies_GRASP: 60.0%, Hitawala–Gray_success_rate_nanobodies_GRASP: 88.8%, AF3_second_best_median_DockQ_antibodies_AF3: 0.069, AF3_second_best_median_DockQ_nanobodies_AF3: 0.237, Experimental_DMS_median_DockQ_GRASP: 0.25, Experimental_DMS_success_rate_GRASP: 53.6%, Experimental_DMS_median_DockQ_AF3: 0.07, Experimental_DMS_success_rate_AF3: 39.3%, Combined_protocol_median_DockQ: 0.28, Combined_protocol_success_rate: 56.0%, mitochondria_predicted_PPIs_total: 144 PPIs predicted (121 had pLDDT > 75), XLs_satisfied_GRASP: 140/144 predicted PPIs satisfied XLs, XLs_satisfied_AFM: 31/144, median_TM_score_on_17_ground_truth_pairs_GRASP: 0.881, median_TM_score_on_17_ground_truth_pairs_AFM: 0.838, pLDDT_Pearson_correlation_with_DockQ: r = 0.39, pLDDT_Pearson_correlation_with_TM_score: r = 0.65, pLDDT_Pearson_correlation_with_LDDT: r = 0.87, improvements_in_pLDDT_vs_gains_in_DockQ_TM_LDDT: r = 0.38 (DockQ), 0.58 (TM), 0.77 (LDDT) |

| Application Domains | protein complex structure prediction / structural biology, antigen–antibody modelling and antibody design / immunotherapy, integrative structural biology (integrating XL-MS, CL, CSP, DMS, cryo-EM, NMR PRE, mutagenesis), in situ interactome modelling (mitochondrial PPI mapping), computational docking and restrained docking workflows |

374. De novo discovery of conserved gene clusters in microbial genomes with Spacedust, Nature Methods (September 15, 2025)

| Category | Items |

|---|---|

| Datasets | 1,308 representative bacterial genomes (reference database), Gold-standard BGC dataset (nine complete genomes), GTDB (Genome Taxonomy Database), AlphaFold structure database (and other structure DBs: PDB, ESMAtlas), PADLOC antiviral defense annotations, AntiSMASH functional annotation (version 8) |

| Models | T5, Transformer, Hidden Markov Model |

| Tasks | Clustering, Information Retrieval, Feature Extraction, Classification |

| Learning Methods | Representation Learning, Pre-training |

| Performance Highlights | AUC (precision–recall) i,i+1: 0.93, AUC (precision–recall) i,i+2: 0.93, AUC (precision–recall) i,i+3: 0.86, AUC (precision–recall) i,i+4: 0.81, AUC (precision–recall) i,i+1: 0.91, AUC (precision–recall) i,i+2: 0.89, AUC (precision–recall) i,i+3: 0.83, AUC (precision–recall) i,i+4: 0.77, PADLOC multi-gene defense clusters (reference): 5,520, Spacedust recovery of PADLOC clusters: 5,255 (95%), Spacedust full-length matches: 4,888 (93% of recovered clusters), Spacedust partial matches: 367 (7% of recovered clusters), Non-redundant clusters (paired matches grouped): 72,483 nonredundant clusters comprising 2.45M genes (58% of dataset), All pairwise cluster hits: 321.2M cluster hits in 106.6M cluster matches; mean genes per cluster match = 3.01, Spacedust assignment: 58% of all 4.2M genes assigned to conserved gene clusters; 35% of genes without any annotation assigned to clusters, Average F1 score (Spacedust): 0.61, Average F1 score (ClusterFinder): 0.44, Average F1 score (DeepBGC): 0.39, Average F1 score (GECCO): 0.43 |

| Application Domains | Microbial genomics (bacteria and archaea), Metagenomics / metagenome-assembled genomes, Microbiome research (environmental and human-associated microbiomes), Comparative genomics / evolutionary genomics (gene neighborhood conservation), Functional annotation of genes (operons, antiviral defense systems, biosynthetic gene clusters), CRISPR–Cas systems discovery (e.g., expansion of subtype III-E), Biosynthetic gene cluster discovery and natural product genome mining |

372. Guided multi-agent AI invents highly accurate, uncertainty-aware transcriptomic aging clocks, Preprint (September 12, 2025)

| Category | Items |

|---|---|

| Datasets | ARCHS4, ARCHS4 — blood subset, ARCHS4 — colon subset, ARCHS4 — lung subset, ARCHS4 — ileum subset, ARCHS4 — heart subset, ARCHS4 — adipose subset, ARCHS4 — retina subset |

| Models | XGBoost, LightGBM, Support Vector Machine, Linear Model, Transformer |

| Tasks | Regression, Feature Selection, Feature Extraction, Clustering |

| Learning Methods | Supervised Learning, Ensemble Learning, Imbalanced Learning |

| Performance Highlights | R2: 0.619, R2: 0.604, R2: 0.574, R2_Ridge: 0.539, R2_ElasticNet: 0.310, R2: 0.957, MAE_years: 3.7, R2_all: 0.726, MAE_all_years: 6.17, R2_confidence_weighted: 0.854, MAE_confidence_weighted_years: 4.26, mean_calibration_error: 0.7%, R2_per_window_range: ≈0.68–0.74, lung_R2: 0.969, blood_R2: 0.958, ileum_R2: 0.958, heart_R2: 0.910, adipose_R2: 0.887, retina_R2: 0.594 |

| Application Domains | aging biology / geroscience, transcriptomics, biomarker discovery, computational biology / bioinformatics, clinical biomarker development (biological age clocks), AI-assisted scientific discovery (multi-agent workflows) |

371. Flexynesis: A deep learning toolkit for bulk multi-omics data integration for precision oncology and beyond, Nature Communications (September 12, 2025)

| Category | Items |

|---|---|

| Datasets | CCLE, GDSC2, TCGA (multiple cohorts: pan-cancer, COAD, ESCA, PAAD, READ, STAD, UCEC, UCS, LGG, GBM), METABRIC, DepMap, ProtTrans protein sequence embeddings (precomputed), describePROT features, STRING interaction networks, Single-cell CITE-Seq of bone marrow, PRISM drug screening and CRISPR screens (DepMap components) |

| Models | Multi-Layer Perceptron, Feedforward Neural Network, Variational Autoencoder, Triplet Network, Graph Neural Network, Graph Convolutional Network, Support Vector Machine, Random Forest, XGBoost, Random Survival Forest, Graph Attention Network |

| Tasks | Regression, Classification, Survival Analysis, Clustering, Dimensionality Reduction, Feature Selection, Representation Learning |

| Learning Methods | Supervised Learning, Unsupervised Learning, Multi-Task Learning, Transfer Learning, Fine-Tuning, Hyperparameter Optimization, Contrastive Learning, Representation Learning, Domain Adaptation |

| Performance Highlights | Pearson_correlation_Lapatinib: r = 0.6 (p = 7.750175e-42), Pearson_correlation_Selumetinib: r = 0.61 (p = 3.873949e-50), AUC_MSI_prediction: AUC = 0.981, logrank_p: p = 9.94475168880626e-10, per-cell-line_correlation_distribution: median correlations shown per cell line (N=1064) with improvement when adding ProtTrans embeddings; exact medians not specified numerically in text, cross-domain_TCGA->CCLE_before_finetuning_F1: approx. 0.16, cross-domain_TCGA->CCLE_after_finetuning_F1: up to 0.8 |

| Application Domains | Precision oncology / cancer genomics, Pharmacogenomics (drug response prediction), Clinical genomics (survival prediction, biomarker discovery), Functional genomics (gene essentiality prediction, DepMap analyses), Multi-omics data integration (transcriptome, methylome, CNV, mutation), Proteomics / protein sequence analysis (ProtTrans embeddings, describePROT), Single-cell multi-omics (CITE-Seq cell type classification proof-of-concept), Bioinformatics tool development and benchmarking |

370. Biophysics-based protein language models for protein engineering, Nature Methods (September 11, 2025)

| Category | Items |

|---|---|

| Datasets | Rosetta simulated pretraining data (METL-Local), Rosetta simulated pretraining data (METL-Global), GFP (green fluorescent protein) experimental dataset, DLG4-Abundance (DLG4-A) experimental dataset, DLG4-Binding (DLG4-B) experimental dataset, GB1 experimental dataset, GRB2-Abundance (GRB2-A) experimental dataset, GRB2-Binding (GRB2-B) experimental dataset, Pab1 experimental dataset, PTEN-Abundance (PTEN-A) experimental dataset, PTEN-Activity (PTEN-E) experimental dataset, TEM-1 experimental dataset, Ube4b experimental dataset, METL Rosetta datasets (archived) |

| Models | Transformer, Linear Model, Convolutional Neural Network, Feedforward Neural Network, Attention Mechanism, Multi-Head Attention |

| Tasks | Regression, Synthetic Data Generation, Data Augmentation, Optimization, Representation Learning |

| Learning Methods | Pre-training, Fine-Tuning, Supervised Learning, Self-Supervised Learning, Transfer Learning, Feature Extraction, Zero-Shot Learning, Representation Learning |

| Performance Highlights | mean_Spearman_correlation: 0.91, in_distribution_mean_Spearman: 0.85, out_of_distribution_mean_Spearman: 0.16, mutation_extrapolation_avg_Spearman_range: ~0.70-0.78, ProteinNPT_Spearman: 0.65, METL-Local_Spearman: 0.59, supervised_models_avg_Spearman: >0.75, ProteinNPT_avg_Spearman: 0.67, typical_Spearman: <0.3, GB1_supervised_models_Spearman: >=0.55, GB1_METL-Local_METL-Global_Spearman: >0.7, METL-Bind_median_Spearman: 0.94, METL-Local_median_Spearman: 0.93, Linear_median_Spearman: 0.92, Regime_METL-Bind_Spearman: 0.76, Regime_METL-Local_Spearman: 0.74, Regime_Linear_Spearman: 0.56, designed_variants_with_measurable_fluorescence: 16/20, Observed_5-mutants_success_rate: 5/5 (100%), Observed_10-mutants_success_rate: 5/5 (100%), Unobserved_5-mutants_success_rate: 4/5 (80%), Unobserved_10-mutants_success_rate: 2/5 (40%), competitive_on_small_N: Linear-EVE and Linear sometimes competitive with METL-Local on small training sets (dataset dependent), general_performance: CNN baselines were generally outperformed by METL-Local; specific numbers in Supplementary Fig. 7 |

| Application Domains | Protein engineering, Biophysics / molecular simulation, Computational protein design, Structural biology, Enzyme engineering (catalysis), Fluorescent protein engineering (GFP brightness), Stability and expression prediction |

369. Towards agentic science for advancing scientific discovery, Nature Machine Intelligence (September 10, 2025)

| Category | Items |

|---|---|

| Datasets | AFMBench (autonomous microscopy benchmark), METR (benchmark for long-horizon multi-step tasks), Crystallographic databases (generic structured resources), Gene ontologies (as structured resources), Chemical reaction networks (structured representations) |

| Models | Transformer, Attention Mechanism, Multi-Head Attention, Multi-Layer Perceptron |

| Tasks | Experimental Design, Novelty Detection, Text Generation, Language Modeling, Sequence-to-Sequence, Graph Generation, Question Answering |

| Learning Methods | Active Learning, Transfer Learning, Self-Supervised Learning, Reinforcement Learning, Fine-Tuning, Supervised Learning |

| Performance Highlights | qualitative_outcome: struggles / compounds errors over time, qualitative_outcome: revealed critical failure modes |

| Application Domains | Chemistry, Materials Science, Microscopy / Laboratory Automation, Crystallography / Materials Characterization, Biology / Genomics (via gene ontologies), Clinical domains (clinical diagnosis mentioned as boundary condition), Social Sciences (noted as challenging domain), Autonomous Laboratories / Robotics-enabled synthesis |